As artificial intelligence (AI) and machine learning (ML) develop at an accelerating pace, the growing complexity and unpredictability of algorithms have prompted an important debate about trust, accountability, and transparency. Although these technologies are reshaping industries, including healthcare and fintech, their black-box nature often leaves users unaware of how decisions are made.

This lack of interpretability has made broad adoption difficult, especially in areas where accuracy and fairness matter most. Enter Explainable AI (XAI), an approach that sheds light on how machine learning models make their decisions and gives people more ways to understand them.

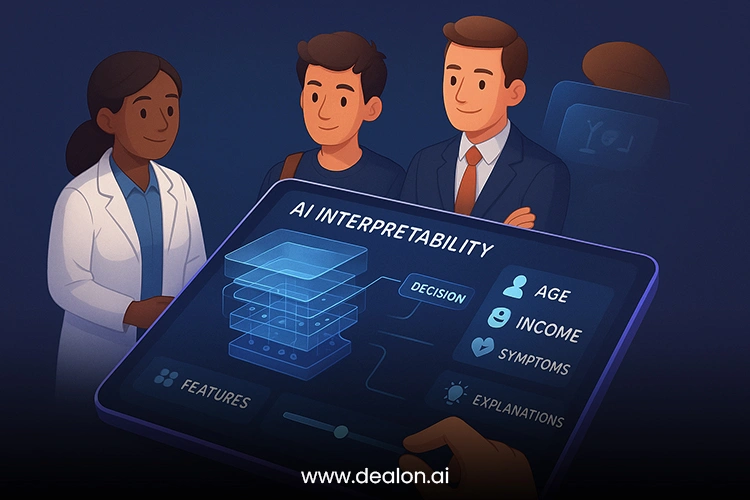

Explainable AI is not just a narrow technical innovation but a paradigm shift toward the democratisation of AI. The idea is that AI systems should not operate in isolation but in line with human comprehension and values. By combining interpretability methods such as visualizations, rule-based explanations, and feature importance insights, XAI gives stakeholders the means to understand even the most complex models. This transparency builds trust and makes AI systems easier to deploy to a wider audience, including non-experts, regulators, and end users.

With industries deploying machine learning in more key processes, the stakes of trusting AI have never been higher. Besides making AI more trustworthy, XAI also addresses essential ethical issues, such as bias and fairness, which might otherwise undermine users' confidence in AI systems. This blog post looks at six powerful arguments for how Explainable AI is changing machine learning, enhancing trust, and laying the groundwork for more responsible, equitable, and ethical AI products.

Demystifying Black-box Models

Many ML models are opaque, especially deep neural networks, which are commonly referred to as black-box models. Such models can make very accurate predictions, but people cannot fully understand their decision-making procedures. This lack of interpretability is a considerable challenge, especially in fields such as healthcare and finance, where AI decisions can drastically change the course of people's lives. Without transparency, users cannot be confident in how a model reached a particular conclusion, which raises doubts about whether AI-based systems are fair, reliable, and accountable.

What makes black-box interpretability harder still is the complexity of these models. Deep neural networks can have millions of parameters spread across many layers, which makes it difficult to determine how they reach their decisions. The ability to explain and understand these models is therefore not only technically vital but ethically vital as well, particularly in high-stakes settings. In healthcare, for example, AI systems are used to recommend life-saving treatments or predict patient outcomes. Without a clear understanding of the logic behind the system, its predictions may be mistrusted, with adverse consequences for patients.

Explainable AI (XAI) addresses this problem by revealing the inner workings of these complicated models. It makes the decision-making process more understandable to both technical and non-technical stakeholders through approaches such as feature importance analysis, decision boundary visualization, and model-agnostic explanation methods.

For example, XAI can reveal which features, such as age, gender, or medical history, had a significant impact on a model's predictions. By breaking the decision-making process into smaller, understandable pieces, XAI increases user confidence by giving the user a better grasp of why the model reached a specific decision. Such openness not only reduces skepticism but also encourages a more responsible approach to deploying AI, so that it is used ethically and effectively.
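To make feature importance analysis concrete, here is a minimal sketch of permutation importance, one common model-agnostic technique: shuffle one feature at a time and measure how much the model's accuracy drops. The model, feature names, and data below are hypothetical and exist only for illustration; a real project would likely rely on an established library rather than this hand-rolled version.

```python
import random

def toy_model(row):
    """Hypothetical risk model: uses age and prior conditions, ignores shoe size."""
    age, prior_conditions, shoe_size = row
    return 1 if (0.04 * age + 0.9 * prior_conditions) > 3.0 else 0

def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, n_features, seed=0):
    """Accuracy drop observed when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the label
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(model, shuffled, labels))
    return importances

# Synthetic data (assumption): labels come from the toy model itself.
data_rng = random.Random(42)
rows = [
    (data_rng.randint(20, 90), data_rng.randint(0, 3), data_rng.randint(36, 47))
    for _ in range(500)
]
labels = [toy_model(r) for r in rows]

importances = permutation_importance(toy_model, rows, labels, n_features=3)
for name, score in zip(("age", "prior_conditions", "shoe_size"), importances):
    print(f"{name}: accuracy drop {score:.3f}")
```

A feature the model never reads (shoe size here) shows no accuracy drop, while features the model depends on show a positive one. That contrast is what tells a user which inputs drove the predictions.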

Empowering Accountability and Compliance

In heavily regulated fields such as healthcare, finance, and law, the decisions of machine learning models should not only be correct but also verifiable and explainable. Organizations increasingly deploy AI to make crucial decisions that affect people's lives, such as approving loan applications, proposing medical treatment, or informing a legal sentence. When AI systems malfunction or make harmful decisions, these organizations must be able to explain how and why it happened. Providing comprehensible, traceable reasons for AI decisions is essential for accountability and for meeting regulatory requirements.

Explainable AI (XAI) has become an essential part of this process because it provides insight into the reasoning behind each model output. For example, when an AI model denies a loan application, XAI can explain why by disclosing which criteria drove the decision, such as income, debt-to-income ratio, or credit score.
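As a rough illustration of the loan case, the sketch below scores an applicant with a simple linear model and ranks each feature by how much it moved the score relative to a reference applicant. The weights, threshold, reference values, and applicant data are all invented for this example and do not reflect any real lender's model.

```python
# Hypothetical linear credit model (all numbers are illustrative assumptions).
WEIGHTS = {"income_thousands": 0.02, "debt_to_income": -4.0, "credit_score": 0.01}
BIAS = -6.0
THRESHOLD = 0.0

# Reference applicant that each contribution is measured against (assumption).
REFERENCE = {"income_thousands": 60, "debt_to_income": 0.30, "credit_score": 680}

def explain_decision(applicant):
    """Return the decision, the raw score, and features ranked from most harmful to most helpful."""
    score = BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)
    decision = "approved" if score >= THRESHOLD else "denied"
    # For a linear model, weight * (value - reference) is each feature's exact share of the score gap.
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - REFERENCE[name]) for name in WEIGHTS
    }
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    return decision, score, ranked

applicant = {"income_thousands": 45, "debt_to_income": 0.55, "credit_score": 610}
decision, score, ranked = explain_decision(applicant)

print(f"Decision: {decision}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f} relative to the reference applicant")
```

The ranked list is what an audit trail or a customer-facing explanation could be built from. For non-linear models the per-feature shares are not this simple, and attribution methods designed for that case would be needed instead.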

In healthcare, XAI can show how the AI arrived at its treatment options, informing the practitioner which symptoms, patient traits, or patient histories led to its suggestion. These explanations are vital because they give the organization a clear audit trail of its decision-making processes, helping it confirm that it is acting within legal and ethical norms.

Additionally, XAI helps reduce liability risk for organizations. When there is a dispute or a malfunction, XAI gives businesses the means to trace how the AI software arrived at a conclusion. This is critical in areas where decisions have significant financial, legal, or personal implications.

For example, when a medical procedure suggested by AI causes side effects, an explanation of the model's logic lets doctors, patients, and regulators assess whether the decision was reasonable. In financial services, transparent decision-making technology reduces the risk of regulatory sanctions and customer complaints, because the AI-driven process can be shown to be fair.

Creating Trust in the User via Interpretability

A machine learning model's interpretability is inherently connected to the trust placed in it. Users are reluctant to trust a system whose decisions they cannot understand, especially when those decisions have real-world consequences. When people feel that a technology is too advanced or obscure, they may not trust it even when it produces the right results. This hesitation can hold back AI adoption, particularly where decisions are sensitive or critical.

Explainable AI (XAI) offers a solution by improving the transparency of machine learning models. It explains how a model reaches a prediction in an intuitive way, usually through visualizations, feature importance measures, or natural language. By presenting such explanations in an easily comprehensible form, XAI narrows the gap between sophisticated algorithms and human understanding, so that users have less reason to worry about whether the system's results are correct.

For example, in healthcare XAI can show how different medical factors (age, medical history, or lifestyle) contributed to a conclusion or treatment suggestion. In lending, XAI can break down the reasons a loan was approved or declined, including income, outstanding debt, and past financial conduct. These clear, easy-to-read explanations demystify the model's behaviour and help users make more informed decisions based on what the AI tells them.

Encouraging Equity and Eliminating Prejudice

Potential bias and discrimination are among the most pressing issues in machine learning, mainly when models are trained on prejudiced or unrepresentative datasets. Such biases can unintentionally carry over into the model's decision-making and produce discriminatory results that disproportionately harm some groups of people. This is particularly worrying in areas such as criminal justice, recruitment, and lending, where AI-based decisions might compound existing inequalities and discrimination in society.

Explainable AI (XAI) provides an important means of finding and mitigating bias in machine learning models. By giving stakeholders insight into how a model arrives at a decision, XAI makes it possible to investigate which features or data points are influencing the model. This level of insight enables organizations to identify possible sources of bias and make adjustments to improve fairness.

For example, XAI can show whether demographic characteristics such as race or gender carry undue weight in a hiring decision or a credit score assessment. Organizations can use this information to correct the problem, for example by adjusting the data the model is trained on or altering the model's behavior, in order to reduce bias and increase fairness.
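One simple check that often accompanies such an investigation is to compare how often each group receives a favorable outcome. The sketch below computes per-group selection rates and their ratio for a hypothetical set of hiring decisions; the group labels, counts, and the 0.8 cutoff (borrowed from the commonly cited "four-fifths" rule of thumb) are assumptions for illustration, not a complete fairness audit.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Map each group to the fraction of its members who were selected."""
    totals = defaultdict(int)
    selected_counts = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        selected_counts[group] += int(selected)
    return {group: selected_counts[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so the rates are equal
    return min(rates.values()) / highest

# Hypothetical model outputs: (group label, was the candidate selected?)
decisions = (
    [("group_a", True)] * 50 + [("group_a", False)] * 50
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # illustrative cutoff, not a legal determination

print(rates)
print(f"ratio = {ratio:.2f}, needs review: {needs_review}")
```

A low ratio does not by itself prove the model is biased, but it flags where feature-level explanations should be examined more closely.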

Beyond this, XAI helps align AI systems with ethical and social values. By reducing the threat of discrimination, XAI allows fairer systems to be built and promotes equality, which in turn increases public trust in AI technologies. With greater transparency and accountability, AI can contribute to positive outcomes for society, including reducing discrimination, supporting equitable access, and enabling ethical decision-making across a range of fields.

Increasing the Robustness and Trustworthiness of Models

Machine learning models are usually trained on a fixed training set, and when deployed in dynamic, real-life situations they encounter new, unseen data. A model's robustness is its capacity to perform well in such unpredictable situations. For example, an AI system for autonomous driving should cope with a range of scenarios, such as a sudden change in weather or an obstacle appearing unexpectedly on the road.

In the same way, an AI model used in the financial industry must adapt to economic changes that were not present in its training data. In such circumstances, a model's usefulness depends on how well it performs, and its reliability depends on how it responds to new data.

However, a recurring problem in such cases is the lack of transparency about how models respond to new, unseen data. Without knowing how a model reacts to changes in its input, people may become suspicious of its predictions, especially when those predictions feed into significant, life-changing decisions.

For example, if an AI model fails to predict stock market trends correctly after an unusual global event, stakeholders will doubt how the system will handle future data, which undermines its credibility and trustworthiness. It is therefore essential to explain how a model remains accurate and consistent when new information appears.

Explainable AI (XAI) is important in managing these issues because it gives developers and stakeholders a clear sense of how models behave when presented with previously unseen information. XAI gives the user insight into how a model processes new data, which features it pays the most attention to, and how it makes decisions based on that information.

As a concrete example, when an AI model in the medical field is shown a new form of medical imaging data (e.g., X-ray or MRI), XAI can explain why it considered certain elements of the image important in reaching a diagnosis, even though the model had never been trained on that kind of data. Such transparency helps confirm that the model operates in a predictable way and that any updates introduced in response to new data are visible to the end user.
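One lightweight way to see how a model reacts to changes in its input is a one-at-a-time sensitivity probe: nudge each input slightly and record how far the output moves. The forecasting function and its inputs below are hypothetical stand-ins, and this sketch only describes behaviour near a single input point, not the model as a whole.

```python
def toy_forecast(inputs):
    """Hypothetical market-outlook score; note that it never reads 'volume'."""
    return 2.0 * inputs["rate"] - 0.5 * inputs["volatility"]

def sensitivity(model, inputs, rel_step=0.05):
    """Change in model output when each input is increased by rel_step (5% by default)."""
    base = model(inputs)
    effects = {}
    for name, value in inputs.items():
        nudged = dict(inputs)  # copy so the other inputs stay fixed
        nudged[name] = value * (1 + rel_step)
        effects[name] = model(nudged) - base
    return effects

current = {"rate": 4.0, "volatility": 20.0, "volume": 1000.0}
effects = sensitivity(toy_forecast, current)

# Print the inputs in order of how strongly they move the output.
for name, change in sorted(effects.items(), key=lambda item: -abs(item[1])):
    print(f"{name}: output changes by {change:+.3f} for a 5% increase")
```

Running the same probe on data gathered after an unusual event and comparing it with earlier results gives stakeholders a concrete view of whether the model's behaviour has shifted.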

Encouraging a Human-AI Partnership

Rather than treating AI as a substitute for human decision-making, Explainable AI (XAI) envisions a future in which AI and human expertise go hand in hand. This collaborative view holds that AI systems should be tools that empower professionals and complement their abilities rather than replace them. By offering simple, actionable explanations of the rationale behind AI-driven decisions, XAI enables human experts to combine the strengths of the AI system with their own knowledge and expertise to make better, well-rounded decisions.

The stakes of decision-making are very high in many professions, especially in the health, financial, and legal sectors. In such settings, practitioners use AI to process large quantities of data and deliver suggestions, yet they still bring their own experience and judgment to the process. In healthcare, for example, physicians can use AI-based systems to help detect a disease from medical images or genetic information.

The AI may provide a list of possible diagnoses, and the doctor is expected to verify it and decide on further steps based on both the AI's suggestions and his or her clinical experience. This helps ensure that the final decision is an informed one, based on the accuracy of the model as well as the in-depth knowledge of the human expert.

The explanations provided by XAI are essential to this cooperation because they ensure that every recommendation made by the AI can be evaluated and backed by a clear rationale. By offering a transparent explanation of a diagnosis or recommendation instead of an opaque output, XAI allows medical experts to understand which data points and features mattered to the model.

For example, the AI may explain that certain patterns in a medical scan or certain genetic indicators were taken into account in the diagnosis, so the physician can gauge whether these considerations match their own observations and medical experience. This makes the AI's recommendations more transparent and credible, and gives healthcare professionals confidence that they can rely on the AI's insights when making their final decision on care.

Conclusion

XAI makes complex models comprehensible so that their decisions can be understood, which supports usefulness, reliability, and confidence. It promotes accountability in regulated industries, where ethical and legal principles must be followed. XAI also addresses bias and supports equity by explaining how decisions are made. In addition, by promoting collaboration between people and AI, XAI enables specialists to make sound decisions that combine the accuracy of AI with human experience. Ultimately, XAI helps create a more ethical, trustworthy, and transparent AI environment and encourages the responsible use of technology.