Artificial intelligence has become a driving force of innovation across industry, healthcare, and entertainment. Despite the positive strides made with AI, deepfake technology has generated considerable controversy and debate, touching on privacy, ethics, and personal identity. AI deepfakes sit at the epicenter of technological marvels while raising pressing ethical questions.

The crux of the matter is the ability to alter reality itself: to synthesize so-called deepfakes. The manipulation can be precise enough to fake a specific individual and produce a realistic portrayal of them. The phenomenon has already moved beyond entertainment and found a comfortable home in identity theft, cyber exploitation, and the misuse of information technology. Most shocking of all is how simple, and at the same time how alarming, it is to put this technology to use.

Concerns about deepfakes revolve primarily around the loss of trust and the growing manipulation of images and videos: composite media created with advanced deep learning and machine learning technology. If deepfakes continue to proliferate, the erosion of media privacy and the constant pushing of data privacy boundaries will approach an insurmountable risk, not only to deontological (duty-based) ethical principles but to the individual’s very control over their data and digital self, which remains vastly unregulated.

This article analyzes the disruptive consequences of deepfakes for data privacy, the gaps in current regulation, and the legal and ethical efforts aimed at curbing the misuse of deepfake technology and of the broader wave of synthetic media to come.


What Are Deepfakes and How Do They Work?

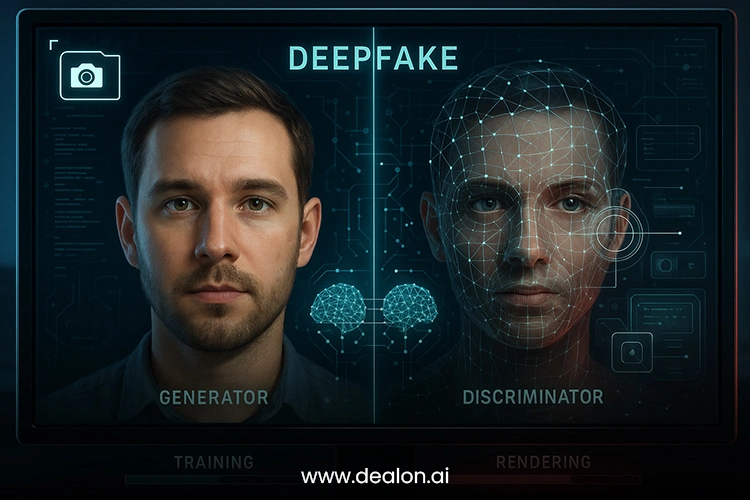

Deepfakes are an astonishing innovation in artificial intelligence, and one that gives media manipulation a menacing twist. They are synthetic media generated with deep learning techniques, primarily Generative Adversarial Networks (GANs). At the center of a GAN are two neural networks in fierce rivalry: one, the generator, produces content meant to be indistinguishable from real media, while the other, the discriminator, works to expose and identify the generated fakes. This adversarial setup is the core of the GAN architecture, and it is what allows AI to create images, audio, and video realistic enough to mimic a person’s face, voice, and even body movements with terrifying precision.
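To make the rivalry concrete, here is a minimal Python sketch of the two competing objectives. It assumes a deliberately tiny one-dimensional toy: the hypothetical `generator(z, a, b)` and `discriminator(x, w, c)` functions stand in for deep networks, and the losses follow the standard binary cross-entropy form of the GAN objective, with the widely used "non-saturating" generator loss. It only computes the losses for one batch; it does not train anything.

```python
import math

def sigmoid(s: float) -> float:
    # Logistic function; inputs stay small in this toy, so overflow is not handled.
    return 1.0 / (1.0 + math.exp(-s))

def generator(z: float, a: float, b: float) -> float:
    """Hypothetical toy generator: turns random noise z into a fake sample."""
    return a * z + b

def discriminator(x: float, w: float, c: float) -> float:
    """Hypothetical toy discriminator: estimated probability that x is real."""
    return sigmoid(w * x + c)

def gan_losses(real, noise, gen_params, disc_params):
    """Return (discriminator_loss, generator_loss) for one batch."""
    a, b = gen_params
    w, c = disc_params
    fakes = [generator(z, a, b) for z in noise]
    d_real = [discriminator(x, w, c) for x in real]
    d_fake = [discriminator(x, w, c) for x in fakes]
    # The discriminator is rewarded for scoring real samples near 1 and fakes near 0.
    d_loss = (-sum(math.log(p) for p in d_real) / len(d_real)
              - sum(math.log(1.0 - p) for p in d_fake) / len(d_fake))
    # The generator is rewarded when the discriminator scores its fakes near 1.
    g_loss = -sum(math.log(p) for p in d_fake) / len(d_fake)
    return d_loss, g_loss

real = [3.5, 4.0, 4.5]    # hypothetical "real" samples
noise = [-0.5, 0.0, 0.5]  # hypothetical noise fed to the generator

# A discriminator that cannot tell anything apart (always outputs 0.5):
print(gan_losses(real, noise, (1.0, 0.0), (0.0, 0.0)))   # about (1.386, 0.693)
# A discriminator that separates real from fake well:
print(gan_losses(real, noise, (1.0, 0.0), (2.0, -4.0)))  # roughly (0.049, 4.02)
```

When the discriminator gets better, its loss falls and the generator’s loss rises; in a real system both players are deep networks updated in alternation, each trying to push its own loss down, which is what drives the fakes toward realism.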

Deepfakes have the power to reshape reality in entertainment and public discourse. They are used, for instance, to restore damaged videos, to recreate historical figures digitally for documentaries, and to build assistive features for people with disabilities. Other fields, such as gaming, stand to benefit from more realistic and personalized digital avatars. The other side of the coin is the technology’s dual use: the same tools that power creative endeavors in art, filmmaking, and education can also serve malicious intentions.

Deepfakes and Their Data Privacy Concerns

At the heart of most deepfake dilemmas lies an urgent issue: the violation of an individual’s right to control their personal information. Most deepfake algorithms are trained on enormous datasets harvested from public-facing media, including videos, social media sites, and interviews. Once that control is lost, the effects can be severe. Criminals can create convincing fake video or audio of their targets, and such attacks are next to impossible to prevent. They can use these fakes to dupe users, defraud companies, or carry out cyber blackmail. The problem, in other words, is financial as well as personal.

A deepfake audio clip or video can be a reputational death blow, acting like a weapon that detonates, so to speak, at the busiest intersection of the public sphere. The personal damage can be enormous, and it can persist even after the content is proven fake. Once released, the audio-visual material becomes part of the public commons, and its original subject loses any say over where it goes or how it is used. The weak privacy safeguards surrounding deepfake technology make the situation even worse.

Furthermore, deepfakes expose essential shortcomings in current legal data protection frameworks. Existing privacy laws, such as the GDPR in the European Union, aim to protect people from more traditional forms of data exploitation, such as identity theft or data breaches. These laws, however, were not designed with the creation and distribution of synthetic material in mind. With deepfakes, a person’s likeness can be obtained digitally and used in content without their knowledge, often leaving them without effective legal protection. In short, today’s legal frameworks do not adequately address the personal data protection issues that deepfakes raise.

These regulatory gaps also raise concerns about global governance. Because deepfake content crosses borders easily, regulating the technology and enforcing those rules is already complex, and differing privacy and digital rights standards across jurisdictions make it harder still. Individuals not only lack protection against the misuse of their likenesses; they also have little means of pursuing the people who create such deepfakes. These issues, then, can realistically be resolved only through international legal cooperation.

Ethical Implications: Manipulation of Identity, Misinformation, and Consent

As deep learning matured into an advanced research field, it made manipulating images and other media cheaper and simpler. Deepfake technology first appeared as a recreational application of artificial intelligence and machine learning, but it soon brought severe socio-political consequences. Blurring the line between the real and the fictitious first infringes on an individual’s private domain, and it also opens the door to misinformation and spurious claims.

On social media, a deepfake targeting an individual, whatever their social standing, can distort how others perceive that person, leave lasting damage to their socio-political standing, and entrench a harmful false picture of them. For democracies, the stakes are obvious: deepfakes make it easy to fabricate speeches and public addresses on virtually any subject, and such fabrications no longer carry the telltale poor video quality that once gave them away.

A deepfake can plant a vivid picture in viewers’ minds and transport them to a version of events completely divorced from reality, leaving them holding on to false perceptions of a political figure. Used subtly, deepfakes can shift the public’s entire perception of an individual.

Such manipulation can hide behind ordinary-looking videos about political opinions. Yet as its effect on the public becomes noticeable, it can topple social structures, leave citizens deeply pessimistic about the political system, and exploit the deep, almost sacred trust that people place in the media.

Additionally, deepfakes violate informed consent, one of the most critical principles in ethical AI. Informed consent today means more than simply accepting the terms of an agreement; it means agreeing with full knowledge of how one’s likeness, voice, and identity will be used.

Deepfakes created without the subject’s knowledge and consent are an outrageous violation of this principle. With deepfake pornography or other content manipulated to defame people, the subject has little to no ability to stop the use of their image or to challenge it. This lack of control over one’s digital identity is an ethical problem that these cases show must be addressed more broadly.

Deepfake technology poses serious new challenges to society’s reliance on visual proof. Videos and photos are treated as definitive evidence in many legal and social contexts, but once evidence can be altered, its trustworthiness collapses and the line between authentic and fake blurs, opening the door to psychological manipulation. Some people may even be gaslit, made to doubt their own perceptions when shown fabricated “proof” of their behavior or counterfeit conversations.

Global Legal Landscape: Playing Catch-Up

International frameworks for tackling emerging digital challenges lag behind the pace of technological innovation. Regulators around the world are attempting to develop legal approaches to the new threat of deepfake technology. In response, the European Union (EU) has begun regulating artificial intelligence (AI) technologies through the EU AI Act, which establishes definitions and requirements for high-risk AI systems.

Under this framework, deepfake technology may be classified as “high-risk” under certain conditions, in which case stricter rules and data protections would govern the resulting deepfakes. Enforcement challenges may remain, particularly across borders, but the act nonetheless represents the first EU-level attempt to limit the malicious use of deepfakes.

In the United States, there is growing interest in laws that punish the intentional fabrication and dissemination of deepfakes. Such legislation would greatly assist in prosecuting people who use deepfake technology to manipulate political discourse, create revenge pornography, or commit financially motivated scams and fraud.

As with the EU’s efforts, though, enforcing such laws is much easier said than done. Like much digital content, a deepfake can be produced in one location and shared around the world within minutes. As a result, perpetrators are often virtually impossible to identify and, therefore, almost impossible to punish.

Regulating deepfakes is a clear case of balancing the right to free speech against the obligation to protect individuals from harm. On the one hand, creating synthetic media can be justified as art, commentary, or satire. On the other hand, the risk of people being manipulated in harmful ways is increasingly real. The most effective legislation is likely to be the one that best preserves legitimate uses of deepfake technology while targeting its destructive applications.

Proposed solutions to the misuse of deepfakes, such as privacy legislation governing the use of personal information in AI systems, digital watermarking to authenticate original media, and AI-based deepfake detection systems, go a long way toward answering the challenges deepfakes pose.

Automated, AI-based media authentication systems are a promising way to distinguish real from synthetic media. However, the deepfake problem is better addressed by combining them with the measures above, particularly new legislation, along with public policy and greater public understanding of the issues surrounding deepfake technology.

The Use of Technology to Combat Deepfakes

AI-based detection systems are powered by advanced algorithms that pick up subtle visual and auditory anomalies even the most astute human analysts may miss. For example, mismatched synchronization between lip movement and speech, audio latency, and pixel-level inconsistencies within a video can indicate that the material has been altered and may be fake. Tools such as these are a critical first step in restoring faith in digital media, even as forgeries grow increasingly sophisticated.
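The synchronization cue mentioned above can be illustrated with a simple cross-correlation check. This is a hypothetical sketch, not a production detector: it assumes an upstream step has already produced one number per video frame for mouth openness and one for audio loudness (extracting those signals is out of scope), and the function name `estimate_av_lag` is invented for illustration. It estimates how many frames the audio is shifted relative to the video; a large or unstable lag is one possible warning sign.

```python
def _center(signal):
    """Subtract the mean so constant offsets do not dominate the score."""
    mean = sum(signal) / len(signal)
    return [v - mean for v in signal]

def estimate_av_lag(mouth_openness, audio_energy, max_lag):
    """Return the frame shift of the audio relative to the video that best
    aligns the two signals (positive means the audio lags behind the video)."""
    video = _center(mouth_openness)
    audio = _center(audio_energy)

    def score(lag):
        # Cross-correlation at this lag, summed over the overlapping frames.
        return sum(
            v * audio[i + lag]
            for i, v in enumerate(video)
            if 0 <= i + lag < len(audio)
        )

    # Try every shift in [-max_lag, max_lag] and keep the best-aligned one.
    return max(range(-max_lag, max_lag + 1), key=score)

# Hypothetical per-frame signals: the audio is the video pattern delayed by 2 frames.
mouth = [0, 1, 0, 0, 3, 0, 0, 2, 0, 0, 0, 0, 0, 0]
audio = [0, 0] + mouth[:-2]
print(estimate_av_lag(mouth, audio, max_lag=4))  # 2
```

In genuine footage the estimated lag should stay near zero and stable across a clip; a real system would compute these signals from learned features rather than hand-made lists.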

Machine learning is one of the most sophisticated strategies used in deepfake detection. Researchers train AI models on examples of authentic and fake media so that the models learn the traces deepfake algorithms leave behind. Such tools can evaluate biometric cues, like the rate of blinking and the movement of facial muscles, which are often unnatural in deepfake content. Detectors can also learn to find flaws in facial expressions that generation algorithms fail to replicate. Tech companies, social media platforms, and media houses have adopted such tools to fight the spread of manipulated videos. Disinformation is a significant area of concern, and these tools offer a strong initial response.
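The blink-rate cue mentioned above can be sketched as a simple heuristic. This is a hypothetical illustration rather than a real detector: it assumes a face-tracking step has already produced an eye-openness score between 0 and 1 for every frame, and the closed-eye threshold (0.2) and "plausible" range of 5 to 40 blinks per minute are illustrative assumptions, not validated values.

```python
def count_blinks(openness, closed_below=0.2):
    """Count open-to-closed transitions in a per-frame eye-openness series."""
    blinks = 0
    eye_closed = False
    for value in openness:
        if value < closed_below:
            if not eye_closed:
                blinks += 1  # a new blink starts on the first closed frame
            eye_closed = True
        else:
            eye_closed = False
    return blinks

def blinks_per_minute(openness, fps, closed_below=0.2):
    """Convert the blink count into a rate using the clip duration."""
    minutes = len(openness) / fps / 60.0
    if minutes == 0:
        return 0.0
    return count_blinks(openness, closed_below) / minutes

def blink_rate_is_suspicious(openness, fps, min_rate=5.0, max_rate=40.0):
    """Flag clips whose blink rate falls outside an assumed plausible range."""
    rate = blinks_per_minute(openness, fps)
    return rate < min_rate or rate > max_rate

# One minute of hypothetical data at 10 frames per second.
fps = 10
natural = [0.3] * 600
for frame in range(0, 600, 40):  # a brief eye closure every 4 seconds
    natural[frame] = 0.1
never_blinks = [0.3] * 600

print(blinks_per_minute(natural, fps))              # 15.0
print(blink_rate_is_suspicious(natural, fps))       # False
print(blink_rate_is_suspicious(never_blinks, fps))  # True
```

A single hand-written rule like this is easy for an improved generator to defeat, which is one reason learned detectors combine many cues at once.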

Marking media files with digital watermarks makes it possible to trace and verify a file’s origin. A user can confirm that a video or audio clip comes from its claimed source in unaltered, unmodified form. This technology could not only slow the rampant spread of synthetic content but also protect people against unauthorized use of their image and likeness, since digital forgeries would become traceable to their origin and creators.
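The verification idea described above can be sketched with a keyed hash. Note the swapped-in technique: this example attaches an HMAC-SHA256 tag computed over the file’s bytes, a cryptographic integrity check, rather than a watermark embedded in the pixels or audio itself. The shared secret key is a simplifying assumption (real provenance schemes typically rely on public-key signatures so anyone can verify without holding the creator’s key), and the function names are hypothetical.

```python
import hashlib
import hmac

def tag_media(media_bytes: bytes, creator_key: bytes) -> str:
    """Compute a provenance tag the creator publishes alongside the file."""
    return hmac.new(creator_key, media_bytes, hashlib.sha256).hexdigest()

def verify_media(media_bytes: bytes, creator_key: bytes, published_tag: str) -> bool:
    """Return True only if the bytes are exactly what the creator tagged."""
    expected = tag_media(media_bytes, creator_key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, published_tag)

key = b"hypothetical-creator-secret"
original = b"stand-in for the raw bytes of an original video file"
tag = tag_media(original, key)

tampered = original.replace(b"original", b"altered")
print(verify_media(original, key, tag))  # True
print(verify_media(tampered, key, tag))  # False
```

Any change to the file, even a single byte, produces a different tag, so an altered clip no longer verifies against the creator’s published value.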

No single detection tool will be enough to address the harms of deepfakes unless it is part of an overarching framework that includes legal and societal countermeasures. Just as cybersecurity depends on both technology and user education, addressing deepfakes is a shared responsibility of technology developers, lawmakers, and the public.

Lawmakers must strengthen data privacy legislation and establish accountability in the deepfake technology space, while the technology industry must continue to evolve and improve deepfake detection. There is also a growing need to educate the public about the perils of synthetic media and to encourage people to protect their digital identities proactively.

Self-Protection in the Era of Synthetic Media

Limit personal content exposure on public platforms:

- Be selective in the photos, videos, and recordings shared online.

- Avoid oversharing personal information, and use privacy settings to minimize the exposure of your personal data.

Enable two-factor authentication (2FA):

- Protect your accounts, especially those linked to personal or business dealings.

- 2FA adds an extra safeguard that blocks unauthorized access even if your login credentials are stolen.
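Many authenticator apps implement 2FA with time-based one-time passwords (TOTP, standardized in RFC 6238). The sketch below, using only Python’s standard library, illustrates why a stolen password alone is not enough: logging in also requires a short code derived from a secret kept on the user’s device and from the current time. The 30-second step and 6-digit default follow common practice, and the secret shown is the RFC’s published test key, not a real credential.

```python
import hashlib
import hmac
import struct
import time

def totp(secret: bytes, at_time=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238) using HMAC-SHA1."""
    if at_time is None:
        at_time = time.time()
    counter = int(at_time // step)            # number of elapsed time steps
    msg = struct.pack(">Q", counter)          # 8-byte big-endian counter
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                # dynamic truncation (RFC 4226)
    code = int.from_bytes(digest[offset:offset + 4], "big") & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

secret = b"12345678901234567890"              # RFC 6238 test key
print(totp(secret, at_time=59, digits=8))     # 94287082 (RFC 6238 test vector)
print(totp(secret))                           # the current 6-digit code
```

The server holds the same secret and computes the same code, so an attacker who has only the password, and not the device holding the secret, cannot produce a valid code.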

Learn to recognize manipulated sights and sounds:

- Telltale signs of a deepfake include odd lighting or shadows and sound that doesn’t properly match the video.

- Learn to spot the subtle traces of manipulated content so you are not taken in by a false narrative.

Report synthetic or non-consensual content:

- Use the tools available on various platforms (Twitter, Facebook, YouTube, etc.) to flag fraudulent and falsified deepfake videos.

- Join the global movement to eliminate abusive content and raise awareness of how to stay protected.

Be a responsible digital citizen:

- Take personal responsibility for protecting your reputation and your digital identity.

- Participate vigilantly on the platforms you use to help reduce the exploitation of deepfake technology for malicious purposes.

By taking these actions, each person can protect their reputation while also taking a proactive step against the looming problem of deepfakes in the modern world.

Conclusion

In today’s world, deepfakes pose a serious threat to privacy, verification, and the moral principles of authenticity. Their potential for harm is unprecedented, and if left unchecked, they will wreak havoc. Although technology already offers potential solutions, such as AI-based deepfake detection and blockchain-based verification, many of the measures a world of deepfakes will require lie outside the realm of technology.

The solutions lie in detailed and comprehensive law-making, relevant engineering creativity, and widespread public education. Education in particular will give ordinary people an understanding of deepfakes and of the tools they can use to defend their privacy and public presence online. Through the combined efforts of technology creators, legislators, and the general public, the deepfake dilemma can be brought under control.