As AI applications spread into many areas of human life, one thought-provoking question is whether machines can experience emotions as humans do. In the past, AI systems were built primarily to carry out logical, data-driven, and algorithmic operations. Emotions, by contrast, are complex, multifaceted, and naturally occurring. They are also socially embedded.

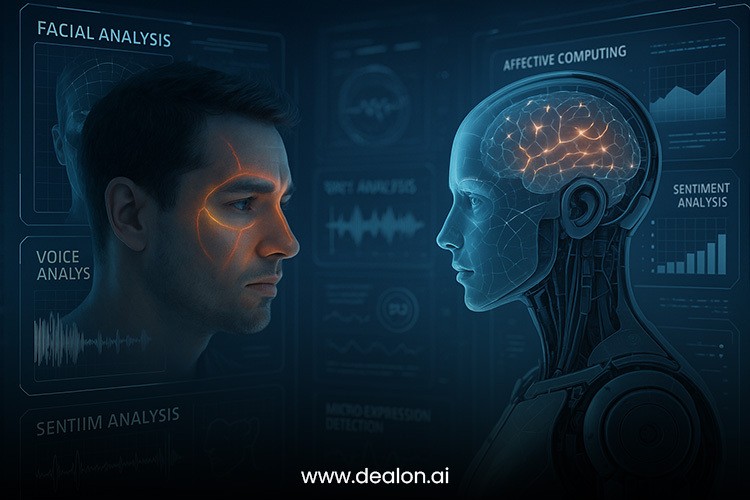

For that reason, emotions have long been one thing scientists could not teach machines. Yet advances in affective computing and emotional AI are enabling machines to interpret and even reproduce human emotions. AI can read facial expressions, tones of voice, and even physiological indicators to gauge emotional states, but can it really understand what it means to feel anything at all?

Here we ask not only whether AI can comprehend emotions but also whether it can feel them. As we start using AI for mental health, customer service, and even relationships, we need to understand both the expanding scope and the limits of AI’s emotional capabilities. Is AI capable of providing the same empathetic and tailored response as a human being? Or will it forever be an approximation, a computer that processes our emotions and may even seem attached to us but does not feel as we do?

In this article, we will see how AI and human emotions connect with each other. We will also explore the science of emotional AI and how machines can enhance our emotional experience. Lastly, we will look at the ethical implications of depending on technology for emotional intelligence. As artificial intelligence develops further, understanding how AI affects our emotions will shape how we build relationships with these machines and how those relationships affect our social, psychological, and ethical fabric.

The Science Behind Emotional AI: How Machines ‘Feel’

Affective computing, a field of growing prominence, studies how AI interacts with human emotions. Its goal is to create systems that recognize, interpret, and respond to human emotions. The basic idea behind emotional AI is that emotions can be measured through observable signals such as facial expression, voice, and body language, plus physiological measures like heart rate or skin temperature. By analyzing these signals, AI systems try to infer a person’s emotional state, from happiness and anger to sadness, fear, and surprise.

Affective computing relies heavily on facial recognition technology. AI can read facial expressions and catch micro-expressions that we do not notice ourselves. For instance, a machine can pick up on slight movements at the corners of a person’s mouth or a narrowing of their eyes. This is useful because machines can be trained to respond in a specific way whenever they detect such cues. Likewise, voice tone analysis can provide clues to a person’s emotions. From the pitch, pace, and volume of a person’s voice, machines can estimate whether the person is speaking with frustration, joy, or sadness.
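To make voice tone analysis a little more concrete, here is a minimal sketch of a rule-based guess from pitch, pace, and volume. The `VoiceFeatures` fields, the `guess_emotion` function, and every threshold below are hypothetical and chosen only for illustration. Real systems typically learn such boundaries from labeled audio rather than from hand-written rules.

```python
from dataclasses import dataclass


@dataclass
class VoiceFeatures:
    pitch_hz: float   # average pitch of the voice
    pace_wpm: float   # speaking rate in words per minute
    volume_db: float  # average loudness


def guess_emotion(v: VoiceFeatures) -> str:
    """Toy rule-based guess. Thresholds are illustrative assumptions."""
    # Loud and fast speech is treated here as a sign of frustration.
    if v.volume_db > 70 and v.pace_wpm > 170:
        return "frustration"
    # High-pitched, lively speech is treated as joy.
    if v.pitch_hz > 220 and v.pace_wpm > 150:
        return "joy"
    # Low, slow, quiet speech is treated as sadness.
    if v.pitch_hz < 150 and v.pace_wpm < 110 and v.volume_db < 55:
        return "sadness"
    return "neutral"


sample = VoiceFeatures(pitch_hz=120, pace_wpm=90, volume_db=50)
print(guess_emotion(sample))
```

The function only maps numbers to a label. Nothing in it corresponds to experiencing the emotion it names.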

In addition, physiological indicators have become very valuable, particularly biometric data such as heart rate variability, body temperature, and even brain activity. With all these inputs combined, AI systems can form a much better picture of a person’s emotions at a given moment. In healthcare, for instance, emotional AI is used to help diagnose mental health conditions. In customer care, chatbots adapt their responses to the customer’s feelings.
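One simple way to picture how several inputs might be combined is a weighted average of per-signal emotion scores. The sketch below assumes each signal source (face, voice, and so on) has already produced a score per emotion. The names `fuse_scores` and `top_emotion`, the weights, and the example numbers are all hypothetical, and production systems may fuse signals quite differently.

```python
EMOTIONS = ("happiness", "anger", "sadness", "fear", "surprise")


def fuse_scores(modality_scores, weights):
    """Weighted average of emotion scores across signal sources."""
    combined = {emotion: 0.0 for emotion in EMOTIONS}
    # Normalize by the weights of the sources that are actually present.
    total_weight = sum(weights[m] for m in modality_scores)
    for modality, scores in modality_scores.items():
        share = weights[modality] / total_weight
        for emotion in EMOTIONS:
            # Emotions a source did not score count as 0.0.
            combined[emotion] += share * scores.get(emotion, 0.0)
    return combined


def top_emotion(combined):
    """Return the emotion label with the highest combined score."""
    return max(combined, key=combined.get)


scores = {
    "face": {"anger": 0.6, "sadness": 0.4},
    "voice": {"anger": 0.2, "sadness": 0.8},
}
weights = {"face": 0.5, "voice": 0.5}  # illustrative weights
print(top_emotion(fuse_scores(scores, weights)))
```

In this example the face alone suggests anger, but the voice shifts the combined estimate toward sadness, which illustrates why combining signals can give a fuller picture than any single one.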

Work in the field is well under way, but emotional AI cannot feel as humans do. It lacks personal insight, inner mental states, and the kind of understanding that is essentially human. AI examines data and finds patterns in it, but it does so without any emotion or lived experience. In other words, AI ‘feels’ only at the level of signals: it senses, interprets, and responds to feelings but does not have them.

An AI may register that someone is angry if their face and voice suggest anger, but this does not mean that the system feels anything. It simply processes the signals and produces an appropriate output. Recognizing emotions in text and images is one thing; experiencing those feelings is another entirely. AI has no inner experience of empathy or emotional understanding. Instead, it uses algorithms designed to create a response that looks empathetic or emotionally appropriate.

Can Machines Empathize with Humans? The Limits of AI’s Emotional Intelligence

Empathy is considered one of the most complex and deeply human capacities and is often described as a pillar of emotional intelligence. It is the capacity to sense other people’s emotions, combined with the ability to imagine what someone else might be thinking or feeling. Genuine empathy is more than feeling what another person feels; it also involves knowing why the other person feels that way. This insight allows humans to connect with one another at a personal level and to offer comfort or guidance.

While AI can display empathy to some extent, it cannot truly understand it. AI can analyze your tone of voice, detect when you sound distressed, and respond as if it cares. A customer service chatbot may say something like, “I can imagine how frustrating that must be,” or “I’m sorry to hear you’re upset.” This type of programmed response aims to be sympathetic. But the machine does not really understand what these phrases mean, let alone what it is like to feel frustrated.
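A programmed response of this kind can be sketched in a few lines. The example below is a deliberately crude illustration, not how any particular chatbot works: the keyword lists, the `detect_emotion` and `reply` functions, and the template wording are all assumptions made for this sketch.

```python
# Canned phrases keyed by a detected emotion label (hypothetical templates).
EMPATHY_TEMPLATES = {
    "frustration": "I can imagine how frustrating that must be.",
    "sadness": "I'm sorry to hear you're upset.",
}

# Naive keyword cues. A real system would use a trained classifier.
DISTRESS_KEYWORDS = {
    "frustration": ("frustrated", "annoyed", "ridiculous"),
    "sadness": ("upset", "disappointed", "unhappy"),
}


def detect_emotion(message):
    """Return an emotion label if any cue word appears, else None."""
    text = message.lower()
    for emotion, keywords in DISTRESS_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return emotion
    return None


def reply(message):
    """Prepend a canned empathetic phrase when a cue is detected."""
    prefix = EMPATHY_TEMPLATES.get(detect_emotion(message), "")
    return (prefix + " How can I help?").strip()


print(reply("I am so frustrated with this order"))
```

The sympathetic sentence is selected by string matching alone, which illustrates why such a reply can sound caring without any understanding behind it.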

The emotional capabilities of AI are tied to data, which means AI expresses itself through data rather than intuition or experience. AI can learn to identify an angry or sad person, but it will not know what caused the reaction. A human can respond not just to another person’s emotion but also to the context, the personal history, and the specifics of the situation. For instance, a friend might notice that a person is upset because of a recent breakup and offer to help them work through it. AI, by contrast, responds only to the data it is given, whether that is a facial expression, a tone of voice, or written text.

Moreover, empathy involves a shared emotional experience. Humans can often feel how other humans are feeling and can see into that emotional landscape to some extent. Someone who has been through a similar hardship, even a lesser one, can bring some comfort to a fellow traveler. AI, by contrast, has no emotional experiences of its own. It processes emotional data but does not actually feel sad, joyful, or fearful. AI systems cannot think like humans, so their empathy is simulated and lacks the depth and authenticity found in human empathy.

Although AI can offer a functional kind of emotional support in areas such as mental health apps or customer service, nothing can replace the empathy that humans have for one another. In therapy, some tools may be able to suggest options or give automated emotional replies, but they cannot replace the presence of a human therapist.

AI Can Help Us Heal – The Role of Machines

Over the years, artificial intelligence (AI) has been put to various uses in the mental health sector. AI chatbots, virtual therapists, and self-help platforms have proven to be an asset for people dealing with mental health issues because they are accessible and immediate. People with anxiety, depression, stress, and other emotional problems are increasingly using apps like Woebot and Replika. These systems use natural language processing (NLP) and machine learning to hold therapeutic-style conversations, suggest solutions, and provide emotional support in real time.

The use of AI in mental health care is on the rise, and it brings many benefits. One of the most significant is accessibility. Many people face barriers to therapy, such as scheduling appointments and other logistics. AI-based tools are always available, so you can get mental health support whenever you need it.

Easy access to the Internet has made possible an immediate kind of help that is especially useful in times of crisis or when a person cannot access therapy because of place, time, or money. Those in need of early help can find coping strategies and emotional support through AI tools. This may help prevent mental health issues from worsening.

Furthermore, these AI systems are designed to be nonjudgmental, so people can feel anonymous and safe enough to open up about how they feel. For many people, opening up to a machine feels easier than talking to a human. This is especially true for those who have experienced shame or stigma. As AI keeps advancing, these systems have become better at spotting patterns in user behavior, tailoring responses to individual needs, and offering personalized strategies to improve mental health.

However, these AI-powered mental health tools have limitations too. A key gap lies in their level of emotional understanding and their capacity to connect on a human level. AI can only imitate sympathy, and its answers are limited by the algorithms it runs on. It lacks the nuance, depth, and richness of the human touch that develops in a therapeutic rapport.

A human therapist’s personal experience and professional training also allow them to pick up on emotional, psychological, and even cultural cues. A machine does not process these as a human therapist would. For instance, AI might identify from a person’s speech that they are anxious, but it does not understand the context of the anxiety. Likewise, it fails to grasp the complexities of mental health conditions in general.

The human connection offered by a licensed professional therapist is the most essential factor in building trust and helping a person feel safe enough to share their feelings. While AI can suggest coping strategies, it cannot take over the work of therapy, which involves a great deal of reflection, self-discovery, and coping skills that stem from the work you do on yourself.

The Ethical Implications of Trusting AI with Our Emotions

Using AI as a tool in managing certain types of mental illness is not new, and on the question of whether machines truly feel, the answer is no. We already place a great deal of trust in machines in everyday life, and we trust our intelligence as a species never to allow a ‘robot attack’. Still, machines can process and respond to data, but they cannot ‘truly understand’ what they are doing. So it is essential to ask ourselves whether it is right, or safe, to depend on AI for emotional support.

One of the most significant ethical issues is privacy and consent. Technology capable of analyzing emotion, whether through a person’s tone of voice, facial expression, or physiological responses, demands access to exceedingly personal information. How is this data being used, stored, and protected? AI can assess a person’s emotional state, and there is a risk that companies will exploit that assessment for their own advantage.

Emotional data could be used to target ads or sway politics. For example, AI could take advantage of a person’s emotional vulnerabilities in a way that subtly influences what they buy or how they vote. It is therefore important for AI developers to establish stringent data privacy protocols so that emotional data is handled safely and appropriately.

Another concern is over-reliance on AI for emotional support, which could result in people bonding less with their peers and lead to further isolation. AI may support clinical care, but there is a risk that some people will turn to machines for emotional relief instead of seeking help in person from a professional. AI cannot substitute for the deep personal connection of psychotherapy. Using therapy bots could make people heavily dependent on machines. It might reduce social interaction and erode empathy. Moreover, users might miss out on the personal growth that comes from working with a human therapist.

It is also essential to avoid confusion about AI’s role in emotional support. Machines should never take the place of trained professionals in the treatment of severe mental health conditions. Although AI can play an essential part in early intervention, it must not be treated as a replacement for qualified mental health care providers, especially in complex or severe cases. The use of such tools should not replace human involvement and judgment, which is why ethical safeguards must be put in place.

Developers and providers need a clear ethical framework that defines the right ways to use AI tools in mental health. Public trust will be undermined unless those creating these tools ensure transparency about how the systems work. Legislation should make sure that AI is not used to exploit vulnerable people for commercial or political interests. Human oversight must be in place so that AI mental health services do not harm the individuals being treated. Machines must not replace interactions with human therapists.

Conclusion

Although artificial intelligence can significantly enhance mental health care, it is essential to establish it as a supplement rather than a replacement. While AI can help calm people between therapy sessions and even provide emotional support, it cannot take the place of a human therapist. It is important that AI be used ethically, which means protecting privacy, obtaining consent, and avoiding over-reliance on technology. In the future, AI is expected to be used alongside conventional mental health care to make it more accessible and efficient, without replacing the priceless human touch and professionalism.