AI has become much more than a computer program. It influences human thought, feeling, and behavior. As AI systems play a greater role in everyday life, making decisions for us, shaping our preferences, and simulating social interaction, the relationship between humans and machines has become impossible to overlook. According to the New York Times, “the psychology of AI examines the image people have of artificial intelligence, how much they trust it, and how they feel about intelligent systems.”

This emerging area of study explores how people adapt to AI and how AI subtly adapts to people through learning and personalization. Understanding the human-like interactivity of AI makes it easier to build systems that are economically efficient, psychologically acceptable, and ethically sound. AI does more than process operations: where technology and human psychology intersect, it becomes a cognitive partner that affects perception, decision-making, and social experience.

The Cognitive Foundations of Human–AI Interaction

Human–AI interaction is shaped by the cognitive processes through which people read agency, intention, and intelligence. The human brain is hardwired to find patterns and meaning, even in non-human things. Users tend to attribute human traits to software, which has given rise to the popular phrase “The Algorithm has a personality”. When AI uses natural language, adapts to users’ preferences, or mimics emotionally relatable expressions, it activates familiar cognitive schemas. As a result, engagement becomes intuitive, and users may accept AI-generated insights without thinking critically about them.

These shared mental models bring both benefits and dangers. On the one hand, intuitive interfaces lessen the strain on our brains, allowing users to comprehend information faster and make better decisions. On the other hand, AI that behaves too much like a human can obscure its limits and lead people to rely on its outputs too heavily.

Effective design must therefore strike a balance between familiarity and clarity. Good design ensures that AI systems support human cognition without creating a false impression of the system’s actual capabilities. By aligning system behavior with human expectations, we can promote productive cooperation rather than dependence.

Trust, Transparency, and Perceived Intelligence

Trust is the psychological bedrock of sustainable human–AI relationships. People assess AI systems not just on performance but also on reliability and coherence. Consistent, dependable outputs build confidence, while erratic ones undermine it. Transparency matters as well: users need clear AI decision pathways before they will trust a system. When users understand the reasoning behind an AI outcome, they’re more likely to agree with it and act on it.

Beliefs about intelligence shape trust, too. According to studies, AI systems that admit uncertainty tend to be seen as more credible than those that claim absolute certainty, which mirrors how humans communicate. By making complex calculations easier to follow, explainable AI frameworks reinforce this perception. Nevertheless, transparency must be implemented carefully: if users are overloaded with technical details, they may lose trust rather than gain it.
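One way to picture a system that admits uncertainty is a small wrapper that turns a confidence score into hedged wording. The sketch below is only an illustration: the function name `describe_prediction` and the 0.9 and 0.6 thresholds are assumptions made for this example, not values drawn from any study or product.

```python
def describe_prediction(label: str, confidence: float) -> str:
    """Phrase a prediction so the wording reflects how sure the system is.

    The thresholds (0.9 and 0.6) are illustrative assumptions.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    pct = round(confidence * 100)
    if confidence >= 0.9:
        return f"This is very likely {label} (about {pct}% confident)."
    if confidence >= 0.6:
        return f"This is probably {label} (about {pct}% confident), but please double-check."
    # Low confidence: say so plainly instead of projecting certainty.
    return f"I'm not sure. My best guess is {label} (about {pct}% confident)."


if __name__ == "__main__":
    for score in (0.95, 0.7, 0.3):
        print(describe_prediction("a spam message", score))
```

The message stays short on purpose: it states how sure the system is without burying the user in technical detail.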

Trust in AI ultimately derives from a balance of how well we understand its workings, how transparently it is designed, and how accurate it is. Systems that account for human psychology while honestly showing their limits stand a better chance of long-term acceptance. As AI becomes more embedded in decision-making, trust must be built in a way that keeps human judgment, rather than AI, in charge.

Emotional Engagement and Social Dynamics

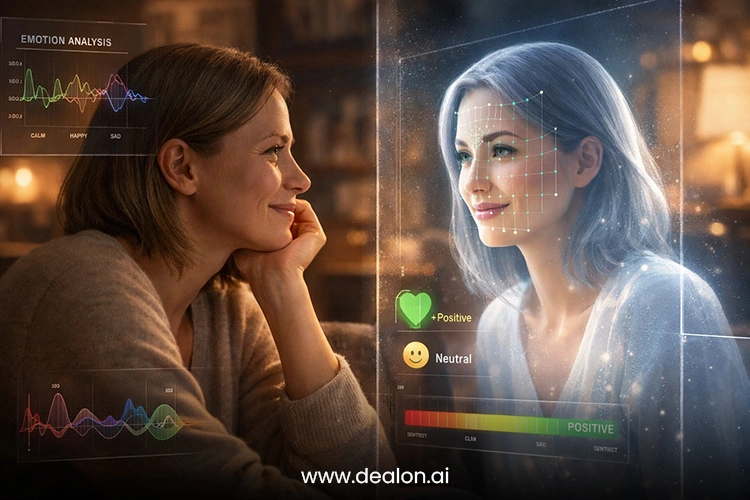

AI’s emotional awareness is changing how humans perceive interaction with artificial avatars. Emotionally responsive AI can adjust its responses based on analysis of a user’s vocal tone, facial expressions, and language patterns. This capability has extended the AI persona from a purely functional object to a social actor capable of providing companionship, advice, and personalized support. In mental health and education, such systems can offer comfort and motivation and help keep users engaged over time when resources are scarce.

Nevertheless, the social impacts of emotional AI are complicated. As users grow attached to these machines, the difference between real and artificial empathy becomes blurred. Relying on AI for long periods can lead users to expect emotional affirmation from it, which can harm real-life relationships. Emotional manipulation raises ethical concerns, particularly when AI systems are used with vulnerable populations. Emotionally intelligent AI must therefore be calibrated so that it enhances wellbeing and neither fosters dependency nor exploits the psychologically vulnerable.

Behavioral Influence and Decision-Making

Artificial intelligence has a significant influence on human behavior through algorithms that recommend products to consumers. The AI learns from user interactions to personalize content and suggestions so that they reach users with less effort. When users are presented with a limited set of options, choosing becomes easier and more satisfying.

However, this influence can have serious effects on an individual’s psyche. Repeated exposure to tailored suggestions can entrench pre-existing ideologies and inclinations, narrowing the range of ideas a person encounters. When this happens, confirmation bias can grow stronger and shape behavior in subtle ways. In addition, predictive mechanisms can steer users’ options; framing choices in a particular way raises concerns about choice and autonomy.

To be ethical and appropriate, AI-based decision support must enhance agency by explaining how recommendation systems work, allowing them to be configured, and providing multiple paths to information. When designers understand the psychological mechanisms of influence, they can design AI that encourages reflective decision-making rather than passive compliance. Ultimately, responsible AI acts as a cognitive assistant, augmenting human judgment while preserving freedom of choice and integrity of mind.
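To make the idea of configurable recommendations with varied information paths more concrete, here is a minimal sketch of a greedy re-ranker that discounts topics the user has already been shown. Everything in it is hypothetical and chosen for illustration: the `Item` fields, the `diversify` function, the `repeat_penalty` setting, and the sample data. It is not a description of how any particular recommender works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    title: str
    topic: str
    relevance: float  # higher means a closer match to past behavior


def diversify(items: list[Item], k: int, repeat_penalty: float = 0.3) -> list[Item]:
    """Greedily pick up to k items, discounting topics already chosen.

    repeat_penalty is a hypothetical, user-adjustable setting:
    0 reproduces a pure relevance ranking; larger values favor variety.
    """
    remaining = list(items)
    chosen: list[Item] = []
    topic_counts: dict[str, int] = {}
    while remaining and len(chosen) < k:
        # Score = relevance minus a penalty for each earlier pick on the same topic.
        best = max(
            remaining,
            key=lambda it: it.relevance - repeat_penalty * topic_counts.get(it.topic, 0),
        )
        chosen.append(best)
        remaining.remove(best)
        topic_counts[best.topic] = topic_counts.get(best.topic, 0) + 1
    return chosen


# Made-up sample data: three similar pieces and two from other viewpoints.
candidates = [
    Item("Op-ed A", "viewpoint-1", 0.95),
    Item("Op-ed B", "viewpoint-1", 0.90),
    Item("Op-ed C", "viewpoint-1", 0.85),
    Item("Explainer", "viewpoint-2", 0.70),
    Item("Interview", "viewpoint-3", 0.65),
]

if __name__ == "__main__":
    print([item.title for item in diversify(candidates, k=3)])
    print([item.title for item in diversify(candidates, k=3, repeat_penalty=0.0)])
```

With the penalty set to zero, the top three picks all come from the same viewpoint. With the default penalty, the list mixes in the other two, which is the kind of user-adjustable control the paragraph above describes.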

The Future of Human-Centered AI

The development of artificial intelligence is increasingly shaped by the recognition that technological sophistication alone will fall short. The objective of human-centered AI is to build intelligent systems that are not only contextually and ethically aware but also sensitive to human emotion and cognitive bias. When human-centered design and AI are merged, future systems will adapt to users’ needs and respond with a human touch.

Moreover, rather than making decisions for you, they will facilitate decision-making. Transparency, explainability, and trust will let users understand and interact meaningfully with intelligent systems. As technological advances integrate environmental science into their designs, AI can be used to enhance creativity, autonomy, and social wellbeing while safeguarding human involvement in an automated world.

Conclusion

The future of AI lies in understanding the human psyche and working together with it. Human-centered design creates intelligent systems that empower us, that we can trust, and that are inclusive and responsible. When innovation and human values converge, AI takes a transformative form that benefits technological and human progress alike.